update

79
.github/ISSUE_TEMPLATE/bug_report.yml
vendored
Normal file
@@ -0,0 +1,79 @@
name: Bug report
description: Create a report to help us improve
labels: bug

body:
  - type: textarea
    id: description
    attributes:
      label: Describe the bug
      description: |
        A clear and concise description of what the bug is.
    validations:
      required: true
  - type: textarea
    id: steps-to-reproduce
    attributes:
      label: Steps to reproduce
      placeholder: |
        1. Go to '...'
        2. Click on '....'
        3. Scroll down to '....'
        4. See error
    validations:
      required: true
  - type: textarea
    id: expected-behavior
    attributes:
      label: Expected behavior
      description: What do you expect to happen?
    validations:
      required: true
  - type: input
    id: operating-system
    attributes:
      label: Operating system
      placeholder: Windows 11 Version 21H2 (OS Build 22000.1574)
    validations:
      required: true
  - type: input
    id: python-version
    attributes:
      label: Python version
      placeholder: "3.11.1"
    validations:
      required: true
  - type: input
    id: miner-version
    attributes:
      label: Miner version
      placeholder: "1.7.7"
    validations:
      required: true
  - type: textarea
    id: other-environment-info
    attributes:
      label: Other relevant software versions
  - type: textarea
    id: logs
    attributes:
      label: Logs
      description: |
        How to provide a DEBUG log:
        1. Set this in your runner script (`run.py`):
        ```py
        logger_settings=LoggerSettings(
            save=True,
            console_level=logging.INFO,
            file_level=logging.DEBUG,
            less=True,
        ```
        2. Start the miner, wait for the error, then stop the miner and post the contents of the log file (`logs\username.log`) to https://gist.github.com/ and post a link here.
        3. Create another gist with your console output, just in case. Paste a link here as well.
    validations:
      required: true
  - type: textarea
    id: other-info
    attributes:
      label: Additional context
      description: Add any other context about the problem here.
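The `Logs` field in the template above asks reporters for INFO on the console and DEBUG in the log file. As a rough illustration of that split, here is a minimal sketch that uses only Python's standard `logging` module. It is not the miner's `LoggerSettings` implementation; the `build_logger` helper and the logger name are hypothetical names chosen for this example.

```python
import logging
import os
import tempfile


def build_logger(log_path: str) -> logging.Logger:
    """Return a logger that prints INFO+ to the console and writes DEBUG+ to a file."""
    logger = logging.getLogger("miner-sketch")  # hypothetical logger name
    logger.setLevel(logging.DEBUG)  # pass everything on; the handlers filter
    logger.handlers.clear()  # avoid duplicate handlers on repeated calls

    console = logging.StreamHandler()
    console.setLevel(logging.INFO)  # mirrors console_level=logging.INFO

    file_handler = logging.FileHandler(log_path, encoding="utf-8")
    file_handler.setLevel(logging.DEBUG)  # mirrors file_level=logging.DEBUG

    formatter = logging.Formatter("%(asctime)s - %(levelname)s - %(message)s")
    console.setFormatter(formatter)
    file_handler.setFormatter(formatter)

    logger.addHandler(console)
    logger.addHandler(file_handler)
    return logger


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "username.log")
    log = build_logger(path)
    log.debug("written to the file only")
    log.info("written to the file and shown on the console")
```

With this arrangement the file is the more complete record, which is presumably why the template asks for the log file first and the console output only as a fallback.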

1
.github/ISSUE_TEMPLATE/config.yml
vendored
Normal file
@@ -0,0 +1 @@
blank_issues_enabled: false

31
.github/ISSUE_TEMPLATE/feature_request.yml
vendored
Normal file
@@ -0,0 +1,31 @@
name: Feature request
description: Suggest an idea for this project
labels: enhancement

body:
  - type: textarea
    id: description
    attributes:
      label: Is your feature request related to a problem?
      description: A clear and concise description of what the problem is.
      placeholder: I'm always frustrated when [...]
  - type: textarea
    id: solution
    attributes:
      label: Proposed solution
      description: |
        Suggest your feature here. What benefit would it bring?

        Do you have any ideas on how to implement it?
    validations:
      required: true
  - type: textarea
    id: alternatives
    attributes:
      label: Alternatives you've considered
      description: Suggest any alternative solutions or features you've considered.
  - type: textarea
    id: other-info
    attributes:
      label: Additional context
      description: Add any other context or screenshots about the feature request here.

26
.github/PULL_REQUEST_TEMPLATE.md
vendored
Normal file
@@ -0,0 +1,26 @@
# Description

Please include a summary of the change and which issue is fixed. Please also include relevant motivation and context. List any dependencies that are required for this change.

Fixes # (issue)

## Type of change

Please delete options that are not relevant.

- [ ] Bug fix (non-breaking change which fixes an issue)
- [ ] New feature (non-breaking change which adds functionality)
- [ ] Breaking change (fix or feature that would cause existing functionality not to work as expected)

# How Has This Been Tested?

Please describe the tests that you ran to verify your changes. Provide instructions so we can reproduce. Please also list any relevant details for your test configuration.

# Checklist:

- [ ] My code follows the style guidelines of this project
- [ ] I have performed a self-review of my code
- [ ] I have commented on my code, particularly in hard-to-understand areas
- [ ] I have made corresponding changes to the documentation (README.md)
- [ ] My changes generate no new warnings
- [ ] Any dependent changes have been updated in requirements.txt

6
.github/dependabot.yml
vendored
Normal file
@@ -0,0 +1,6 @@
version: 2
updates:
  - package-ecosystem: "github-actions"
    directory: "/"
    schedule:
      interval: "daily"

24
.github/stale.yml
vendored
Normal file
@@ -0,0 +1,24 @@
# Number of days of inactivity before an issue becomes stale
daysUntilStale: 150

# Number of days of inactivity before a stale issue is closed
daysUntilClose: 7

# Issues with these labels will never be considered stale
exemptLabels:
  - pinned
  - security
  - bug
  - enhancement

# Label to use when marking an issue as stale
staleLabel: wontfix

# Comment to post when marking an issue as stale. Set to `false` to disable
markComment: >
  This issue has been automatically marked as stale because it has not had
  recent activity. It will be closed if no further activity occurs. Thank you
  for your contributions.

# Comment to post when closing a stale issue. Set to `false` to disable
closeComment: false
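The settings above describe a two-stage policy: an issue is marked stale after 150 days without activity, and a stale issue is closed after 7 more days of inactivity, unless it carries an exempt label. The following is a rough sketch of that decision, not the stale bot's actual code. The `next_action` function is hypothetical, and treating the thresholds as inclusive (`>=`) is an assumption made for this example.

```python
from typing import Iterable, Optional

# Values copied from the stale.yml shown above.
DAYS_UNTIL_STALE = 150
DAYS_UNTIL_CLOSE = 7
EXEMPT_LABELS = {"pinned", "security", "bug", "enhancement"}
STALE_LABEL = "wontfix"


def next_action(labels: Iterable[str], days_inactive: int) -> Optional[str]:
    """Return "mark_stale", "close", or None for an issue.

    Assumption: days_inactive counts days since the last activity, which for
    an already-stale issue means days since it was marked stale.
    """
    label_set = set(labels)
    if label_set & EXEMPT_LABELS:
        return None  # exempt issues are never considered stale
    if STALE_LABEL in label_set:
        return "close" if days_inactive >= DAYS_UNTIL_CLOSE else None
    return "mark_stale" if days_inactive >= DAYS_UNTIL_STALE else None
```

Note that `bug` and `enhancement` are both exempt, and the two issue templates in this commit apply exactly those labels, so issues filed through the templates would stay open under this policy unless relabeled.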

61
.github/workflows/deploy-docker.yml
vendored
Normal file
@@ -0,0 +1,61 @@
name: deploy-docker

on:
  push:
    # branches: [master]
    tags:
      - '*'
  workflow_dispatch:

jobs:
  deploy-docker:
    name: Deploy Docker Hub
    runs-on: ubuntu-latest
    steps:
      - name: Checkout source
        uses: actions/checkout@v4

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3.0.0

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3.0.0

      - name: Login to DockerHub
        uses: docker/login-action@v3.0.0
        with:
          username: ${{ secrets.DOCKER_USERNAME }}
          password: ${{ secrets.DOCKER_TOKEN }}

      - name: Docker meta
        id: meta
        uses: docker/metadata-action@v5
        with:
          images: rdavidoff/twitch-channel-points-miner-v2
          tags: |
            type=semver,pattern={{version}},enable=${{ startsWith(github.ref, 'refs/tags') }}
            type=raw,value=latest

      - name: Build and push AMD64, ARM64, ARMv7
        id: docker_build
        uses: docker/build-push-action@v5.1.0
        with:
          context: .
          push: true
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}
          platforms: linux/amd64,linux/arm64,linux/arm/v7
          build-args: BUILDX_QEMU_ENV=true
          cache-from: type=gha
          cache-to: type=gha,mode=max

      # File size exceeds the maximum allowed 25000 bytes
      # - name: Docker Hub Description
      #   uses: peter-evans/dockerhub-description@v2
      #   with:
      #     username: ${{ secrets.DOCKER_USERNAME }}
      #     password: ${{ secrets.DOCKER_TOKEN }}
      #     repository: rdavidoff/twitch-channel-points-miner-v2

      - name: Image digest AMD64, ARM64, ARMv7
        run: echo ${{ steps.docker_build.outputs.digest }}

90
.github/workflows/github-clone-count-badge.yml
vendored
Normal file
@@ -0,0 +1,90 @@
name: GitHub Clone Count Update Everyday

on:
  schedule:
    - cron: "0 */24 * * *"
  workflow_dispatch:

jobs:
  build:
    runs-on: ubuntu-latest

    steps:
      - uses: actions/checkout@v4

      - name: gh login
        run: echo "${{ secrets.SECRET_TOKEN }}" | gh auth login --with-token

      - name: parse latest clone count
        run: |
          curl --user "${{ github.actor }}:${{ secrets.SECRET_TOKEN }}" \
            -H "Accept: application/vnd.github.v3+json" \
            https://api.github.com/repos/${{ github.repository }}/traffic/clones \
            > clone.json

      - name: create gist and download previous count
        id: set_id
        run: |
          if gh secret list | grep -q "GIST_ID"
          then
            echo "GIST_ID found"
            echo ::set-output name=GIST::${{ secrets.GIST_ID }}
            curl https://gist.githubusercontent.com/${{ github.actor }}/${{ secrets.GIST_ID }}/raw/clone.json > clone_before.json
            if cat clone_before.json | grep '404: Not Found'; then
              echo "GIST_ID not valid anymore. Creating another gist..."
              gist_id=$(gh gist create clone.json | awk -F / '{print $NF}')
              echo $gist_id | gh secret set GIST_ID
              echo ::set-output name=GIST::$gist_id
              cp clone.json clone_before.json
              git rm --ignore-unmatch CLONE.md
            fi
          else
            echo "GIST_ID not found. Creating a gist..."
            gist_id=$(gh gist create clone.json | awk -F / '{print $NF}')
            echo $gist_id | gh secret set GIST_ID
            echo ::set-output name=GIST::$gist_id
            cp clone.json clone_before.json
          fi

      - name: update clone.json
        run: |
          curl https://raw.githubusercontent.com/MShawon/github-clone-count-badge/master/main.py > main.py
          python3 main.py

      - name: Update gist with latest count
        run: |
          content=$(sed -e 's/\\/\\\\/g' -e 's/\t/\\t/g' -e 's/\"/\\"/g' -e 's/\r//g' "clone.json" | sed -E ':a;N;$!ba;s/\r{0,1}\n/\\n/g')
          echo '{"description": "${{ github.repository }} clone statistics", "files": {"clone.json": {"content": "'"$content"'"}}}' > post_clone.json
          curl -s -X PATCH \
            --user "${{ github.actor }}:${{ secrets.SECRET_TOKEN }}" \
            -H "Content-Type: application/json" \
            -d @post_clone.json https://api.github.com/gists/${{ steps.set_id.outputs.GIST }} > /dev/null 2>&1

          if [ ! -f CLONE.md ]; then
            shields="https://img.shields.io/badge/dynamic/json?color=success&label=Clone&query=count&url="
            url="https://gist.githubusercontent.com/${{ github.actor }}/${{ steps.set_id.outputs.GIST }}/raw/clone.json"
            repo="https://github.com/MShawon/github-clone-count-badge"
            echo ''> CLONE.md
            echo '
            **Markdown**

            ```markdown' >> CLONE.md
            echo "[![GitHub Clones]($shields$url&logo=github)]($repo)" >> CLONE.md
            echo '
            ```

            **HTML**
            ```html' >> CLONE.md
            echo "<a href='$repo'><img alt='GitHub Clones' src='$shields$url&logo=github'></a>" >> CLONE.md
            echo '```' >> CLONE.md

            git add CLONE.md
            git config --global user.name "GitHub Action"
            git config --global user.email "action@github.com"
            git commit -m "create clone count badge"
          fi

      - name: Push
        uses: ad-m/github-push-action@master
        with:
          github_token: ${{ secrets.GITHUB_TOKEN }}
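The "Update gist with latest count" step above escapes `clone.json` with `sed` and splices the result into a JSON body by hand. As a hedged alternative sketch (not what the workflow runs), the same payload shape can be produced with Python's `json` module, which takes care of quoting, tabs, and newlines itself. The `build_gist_payload` helper and the sample repository name are hypothetical.

```python
import json


def build_gist_payload(repository: str, filename: str, file_text: str) -> str:
    """Serialize a gist update body: a description plus one file's content."""
    payload = {
        "description": f"{repository} clone statistics",
        "files": {filename: {"content": file_text}},
    }
    # json.dumps escapes backslashes, quotes, tabs and newlines in file_text.
    return json.dumps(payload)


if __name__ == "__main__":
    sample = '{\n\t"count": 3,\n\t"uniques": 2\n}\n'
    print(build_gist_payload("owner/repo", "clone.json", sample))
```

The resulting string could be written to `post_clone.json` and sent with the same `curl -d @post_clone.json` call, leaving the rest of the step unchanged.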

83
.github/workflows/github-traffic-count-badge.yml
vendored
Normal file
@@ -0,0 +1,83 @@
name: GitHub Traffic Count Update Everyday

on:
  schedule:
    - cron: "0 */24 * * *"
  workflow_dispatch:

jobs:
  build:
    runs-on: ubuntu-latest

    steps:
      - uses: actions/checkout@v4

      - name: gh login
        run: echo "${{ secrets.SECRET_TOKEN }}" | gh auth login --with-token

      - name: parse latest traffic count
        run: |
          curl --user "${{ github.actor }}:${{ secrets.SECRET_TOKEN }}" \
            -H "Accept: application/vnd.github.v3+json" \
            https://api.github.com/repos/${{ github.repository }}/traffic/views \
            > traffic.json
      - name: create gist and download previous count
        id: set_id
        run: |
          if gh secret list | grep -q "TRAFFIC_ID"
          then
            echo "TRAFFIC_ID found"
            echo ::set-output name=GIST::${{ secrets.TRAFFIC_ID }}
            curl https://gist.githubusercontent.com/${{ github.actor }}/${{ secrets.TRAFFIC_ID }}/raw/traffic.json > traffic_before.json
            if cat traffic_before.json | grep '404: Not Found'; then
              echo "TRAFFIC_ID not valid anymore. Creating another gist..."
              traffic_id=$(gh gist create traffic.json | awk -F / '{print $NF}')
              echo $traffic_id | gh secret set TRAFFIC_ID
              echo ::set-output name=GIST::$traffic_id
              cp traffic.json traffic_before.json
              git rm --ignore-unmatch TRAFFIC.md
            fi
          else
            echo "TRAFFIC_ID not found. Creating a gist..."
            traffic_id=$(gh gist create traffic.json | awk -F / '{print $NF}')
            echo $traffic_id | gh secret set TRAFFIC_ID
            echo ::set-output name=GIST::$traffic_id
            cp traffic.json traffic_before.json
          fi
      - name: update traffic.json
        run: |
          curl https://gist.githubusercontent.com/MShawon/d37c49ee4ce03f64b92ab58b0cec289f/raw/traffic.py > traffic.py
          python3 traffic.py
      - name: Update gist with latest count
        run: |
          content=$(sed -e 's/\\/\\\\/g' -e 's/\t/\\t/g' -e 's/\"/\\"/g' -e 's/\r//g' "traffic.json" | sed -E ':a;N;$!ba;s/\r{0,1}\n/\\n/g')
          echo '{"description": "${{ github.repository }} traffic statistics", "files": {"traffic.json": {"content": "'"$content"'"}}}' > post_traffic.json
          curl -s -X PATCH \
            --user "${{ github.actor }}:${{ secrets.SECRET_TOKEN }}" \
            -H "Content-Type: application/json" \
            -d @post_traffic.json https://api.github.com/gists/${{ steps.set_id.outputs.GIST }} > /dev/null 2>&1
          if [ ! -f TRAFFIC.md ]; then
            shields="https://img.shields.io/badge/dynamic/json?color=success&label=Views&query=count&url="
            url="https://gist.githubusercontent.com/${{ github.actor }}/${{ steps.set_id.outputs.GIST }}/raw/traffic.json"
            repo="https://github.com/MShawon/github-clone-count-badge"
            echo ''> TRAFFIC.md
            echo '
            **Markdown**
            ```markdown' >> TRAFFIC.md
            echo "[![GitHub Traffic]($shields$url&logo=github)]($repo)" >> TRAFFIC.md
            echo '
            ```
            **HTML**
            ```html' >> TRAFFIC.md
            echo "<a href='$repo'><img alt='GitHub Traffic' src='$shields$url&logo=github'></a>" >> TRAFFIC.md
            echo '```' >> TRAFFIC.md

            git add TRAFFIC.md
            git config --global user.name "GitHub Action"
            git config --global user.email "action@github.com"
            git commit -m "create traffic count badge"
          fi
      - name: Push
        uses: ad-m/github-push-action@master
        with:
          github_token: ${{ secrets.GITHUB_TOKEN }}

157
.gitignore
vendored
Normal file
@@ -0,0 +1,157 @@
# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib/
lib64/
parts/
sdist/
var/
wheels/
share/python-wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.nox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
*.py,cover
.hypothesis/
.pytest_cache/
cover/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3
db.sqlite3-journal

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
.pybuilder/
target/

# Jupyter Notebook
.ipynb_checkpoints

# IPython
profile_default/
ipython_config.py

# pyenv
# For a library or package, you might want to ignore these files since the code is
# intended to run in multiple environments; otherwise, check them in:
# .python-version

# pipenv
# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
# However, in case of collaboration, if having platform-specific dependencies or dependencies
# having no cross-platform support, pipenv may install dependencies that don't work, or not
# install all needed dependencies.
#Pipfile.lock

# PEP 582; used by e.g. github.com/David-OConnor/pyflow
__pypackages__/

# Celery stuff
celerybeat-schedule
celerybeat.pid

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/
.dmypy.json
dmypy.json

# Pyre type checker
.pyre/

# pytype static type analyzer
.pytype/

# Cython debug symbols
cython_debug/

# PyCharm
.idea/

# Custom files
run.py
chromedriver*

# Folders
cookies/*
logs/*
screenshots/*
htmls/*
analytics/*

# Replit
keep_replit_alive.py
.replit
replit.nix

26
.pre-commit-config.yaml
Normal file
@@ -0,0 +1,26 @@
repos:
  - repo: https://github.com/pre-commit/pre-commit-hooks
    rev: v4.1.0
    hooks:
      - id: trailing-whitespace
      - id: end-of-file-fixer
      - id: check-added-large-files
  - repo: https://github.com/pycqa/isort
    rev: 5.12.0
    hooks:
      - id: isort
        files: ^TwitchChannelPointsMiner/
        args: ["--profile", "black"]
  - repo: https://github.com/psf/black
    rev: 22.3.0
    hooks:
      - id: black
        files: ^TwitchChannelPointsMiner/
  - repo: https://github.com/pycqa/flake8
    rev: 3.9.2
    hooks:
      - id: flake8
        files: ^TwitchChannelPointsMiner/
        args:
          - "--max-line-length=88"
          - "--extend-ignore=E501"
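The `files: ^TwitchChannelPointsMiner/` entries above restrict isort, black, and flake8 to the package directory. pre-commit documents `files` as a Python regular expression matched with `re.search` against each repository-relative path, so the effect can be sketched as follows. The `selected` helper and the sample paths are hypothetical and only illustrate the matching, not pre-commit's internals.

```python
import re
from typing import Iterable, List

# Same pattern as the `files:` key in the hooks above.
FILES_PATTERN = re.compile(r"^TwitchChannelPointsMiner/")


def selected(paths: Iterable[str]) -> List[str]:
    """Keep only the paths a hook with this `files` pattern would run on."""
    return [path for path in paths if FILES_PATTERN.search(path)]


if __name__ == "__main__":
    candidates = [
        "TwitchChannelPointsMiner/utils.py",
        "run.py",
        "docs/TwitchChannelPointsMiner/notes.py",
    ]
    print(selected(candidates))
```

Because the pattern is anchored with `^`, only paths that start at the repository root with `TwitchChannelPointsMiner/` match. A top-level script such as `run.py` is skipped by these three hooks, while the generic hooks from `pre-commit-hooks` have no `files` filter and still see it.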

16
.vscode/launch.json
vendored
Normal file
@@ -0,0 +1,16 @@
{
    // Use IntelliSense to learn about possible attributes.
    // Hover to view descriptions of existing attributes.
    // For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387
    "version": "0.2.0",
    "configurations": [
        {
            "name": "Python: run.py",
            "type": "python",
            "request": "launch",
            "program": "${cwd}/run.py",
            "console": "integratedTerminal",
            "justMyCode": true
        }
    ]
}

13
CLONE.md
Normal file
@@ -0,0 +1,13 @@


**Markdown**

```markdown
[![GitHub Clones](https://img.shields.io/badge/dynamic/json?color=success&label=Clone&query=count&url=https://gist.githubusercontent.com/rdavydov/fed04b31a250ad522d9ea6547ce87f95/raw/clone.json&logo=github)](https://github.com/MShawon/github-clone-count-badge)

```

**HTML**
```html
<a href='https://github.com/MShawon/github-clone-count-badge'><img alt='GitHub Clones' src='https://img.shields.io/badge/dynamic/json?color=success&label=Clone&query=count&url=https://gist.githubusercontent.com/rdavydov/fed04b31a250ad522d9ea6547ce87f95/raw/clone.json&logo=github'></a>
```

76
CODE_OF_CONDUCT.md
Normal file
@@ -0,0 +1,76 @@
# Contributor Covenant Code of Conduct

## Our Pledge

In the interest of fostering an open and welcoming environment, we as
contributors and maintainers pledge to making participation in our project and
our community a harassment-free experience for everyone, regardless of age, body
size, disability, ethnicity, sex characteristics, gender identity and expression,
level of experience, education, socio-economic status, nationality, personal
appearance, race, religion, or sexual identity and orientation.

## Our Standards

Examples of behavior that contributes to creating a positive environment
include:

* Using welcoming and inclusive language
* Being respectful of differing viewpoints and experiences
* Gracefully accepting constructive criticism
* Focusing on what is best for the community
* Showing empathy towards other community members

Examples of unacceptable behavior by participants include:

* The use of sexualized language or imagery and unwelcome sexual attention or
  advances
* Trolling, insulting/derogatory comments, and personal or political attacks
* Public or private harassment
* Publishing others' private information, such as a physical or electronic
  address, without explicit permission
* Other conduct which could reasonably be considered inappropriate in a
  professional setting

## Our Responsibilities

Project maintainers are responsible for clarifying the standards of acceptable
behavior and are expected to take appropriate and fair corrective action in
response to any instances of unacceptable behavior.

Project maintainers have the right and responsibility to remove, edit, or
reject comments, commits, code, wiki edits, issues, and other contributions
that are not aligned to this Code of Conduct, or to ban temporarily or
permanently any contributor for other behaviors that they deem inappropriate,
threatening, offensive, or harmful.

## Scope

This Code of Conduct applies both within project spaces and in public spaces
when an individual is representing the project or its community. Examples of
representing a project or community include using an official project e-mail
address, posting via an official social media account, or acting as an appointed
representative at an online or offline event. Representation of a project may be
further defined and clarified by project maintainers.

## Enforcement

Instances of abusive, harassing, or otherwise unacceptable behavior may be
reported by contacting the project team at alex.tkd.alex@gmail.com. All
complaints will be reviewed and investigated and will result in a response that
is deemed necessary and appropriate to the circumstances. The project team is
obligated to maintain confidentiality with regard to the reporter of an incident.
Further details of specific enforcement policies may be posted separately.

Project maintainers who do not follow or enforce the Code of Conduct in good
faith may face temporary or permanent repercussions as determined by other
members of the project's leadership.

## Attribution

This Code of Conduct is adapted from the [Contributor Covenant][homepage], version 1.4,
available at https://www.contributor-covenant.org/version/1/4/code-of-conduct.html

[homepage]: https://www.contributor-covenant.org

For answers to common questions about this code of conduct, see
https://www.contributor-covenant.org/faq

110

CONTRIBUTING.md

Normal file

110

CONTRIBUTING.md

Normal file

@ -0,0 +1,110 @@

|

||||

# Contributing to this repository

|

||||

|

||||

## Getting started

|

||||

|

||||

Before you begin:

|

||||

- Have you read the [code of conduct](CODE_OF_CONDUCT.md)?

|

||||

- Check out the [existing issues](https://github.com/Tkd-Alex/Twitch-Channel-Points-Miner-v2/issues) & see if there is already an opened issue.

|

||||

|

||||

### Ready to make a change? Fork the repo

|

||||

|

||||

Fork using GitHub Desktop:

|

||||

|

||||

- [Getting started with GitHub Desktop](https://docs.github.com/en/desktop/installing-and-configuring-github-desktop/getting-started-with-github-desktop) will guide you through setting up Desktop.

|

||||

- Once Desktop is set up, you can use it to [fork the repo](https://docs.github.com/en/desktop/contributing-and-collaborating-using-github-desktop/cloning-and-forking-repositories-from-github-desktop)!

|

||||

|

||||

Fork using the command line:

|

||||

|

||||

- [Fork the repo](https://docs.github.com/en/github/getting-started-with-github/fork-a-repo#fork-an-example-repository) so that you can make your changes without affecting the original project until you're ready to merge them.

|

||||

|

||||

Fork with [GitHub Codespaces](https://github.com/features/codespaces):

|

||||

|

||||

- [Fork, edit, and preview](https://docs.github.com/en/free-pro-team@latest/github/developing-online-with-codespaces/creating-a-codespace) using [GitHub Codespaces](https://github.com/features/codespaces) without having to install and run the project locally.

|

||||

|

||||

### Open a pull request

|

||||

When you're done making changes, and you'd like to propose them for review, use the [pull request template](#pull-request-template) to open your PR (pull request).

|

||||

|

||||

### Submit your PR & get it reviewed

|

||||

- Once you submit your PR, other users from the community will review it with you. The first thing you're going to want to do is a [self review](#self-review).

|

||||

- After that, we may have questions. Check back on your PR to keep up with the conversation.

|

||||

- Did you have an issue, like a merge conflict? Check out our [git tutorial](https://lab.github.com/githubtraining/managing-merge-conflicts) on resolving merge conflicts and other issues.

|

||||

|

||||

### Your PR is merged!

|

||||

Congratulations! The whole GitHub community thanks you. :sparkles:

|

||||

|

||||

Once your PR is merged, you will be proudly listed as a contributor in the [contributor chart](https://github.com/Tkd-Alex/Twitch-Channel-Points-Miner-v2/graphs/contributors).

|

||||

|

||||

### Keep contributing as you use GitHub Docs

|

||||

|

||||

Now that you're a part of the GitHub Docs community, you can keep participating in many ways.

|

||||

|

||||

**Learn more about contributing:**

|

||||

|

||||

- [Types of contributions :memo:](#types-of-contributions-memo)

|

||||

- [:beetle: Issues](#beetle-issues)

|

||||

- [:hammer_and_wrench: Pull requests](#hammer_and_wrench-pull-requests)

|

||||

- [Starting with an issue](#starting-with-an-issue)

|

||||

- [Labels](#labels)

|

||||

- [Opening a pull request](#opening-a-pull-request)

|

||||

- [Reviewing](#reviewing)

|

||||

- [Self review](#self-review)

|

||||

- [Pull request template](#pull-request-template)

|

||||

- [Python Styleguide](#python-styleguide)

|

||||

- [Suggested changes](#suggested-changes)

|

||||

|

||||

## Types of contributions :memo:

|

||||

You can contribute to the Twitch-Channel-Points-Miner-v2 in several ways. Bug reporting, pull request, propose new features, fork, donate, and much more :muscle: .

|

||||

|

||||

### :beetle: Issues

|

||||

[Issues](https://docs.github.com/en/github/managing-your-work-on-github/about-issues) are used to report a bug, propose new features, or ask for help. When you open an issue, please use the appropriate template and label.

|

||||

|

||||

### :hammer_and_wrench: Pull requests

|

||||

A [pull request](https://docs.github.com/en/github/collaborating-with-issues-and-pull-requests/about-pull-requests) is a way to suggest changes in our repository.

|

||||

|

||||

When we merge those changes, they should be deployed to the live site within 24 hours. :earth_africa: To learn more about opening a pull request in this repo, see [Opening a pull request](#opening-a-pull-request) below.

|

||||

|

||||

## Starting with an issue

You can browse existing issues to find something that needs help!

### Labels

Labels can help you find an issue you'd like to help with.

- The `bug` label is used when something isn't working
- The `documentation` label is used when you suggest improvements or additions to documentation (README.md update)
- The `duplicate` label is used when this issue or pull request already exists
- The `enhancement` label is used when you ask for or propose a new feature or request
- The `help wanted` label is used when you need help with something
- The `improvements` label is used when you suggest improvements to already existing features
- The `invalid` label is used for a non-valid issue
- The `question` label is used when further information is requested
- The `wontfix` label is used if we will not work on it

## Opening a pull request

You can use the GitHub user interface :pencil2: for minor changes, like fixing a typo or updating a readme. You can also fork the repo and then clone it locally to view changes and run your tests on your machine.

### Self review

You should always review your own PR first.

For content changes, make sure that you:

- [ ] Confirm that the changes address every part of the content design plan from your issue (if there are differences, explain them).
- [ ] Review the content for technical accuracy.
- [ ] Review the entire pull request using the checklist present in the template.
- [ ] Copy-edit the changes for grammar, spelling, and adherence to the style guide.
- [ ] Check new or updated Liquid statements to confirm that versioning is correct.
- [ ] Check that all of your changes render correctly in staging. Remember that lists and tables can be tricky.
- [ ] If there are any failing checks in your PR, troubleshoot them until they're all passing.

### Pull request template

When you open a pull request, you must fill out the "Ready for review" template before we can review your PR. This template helps reviewers understand your changes and the purpose of your pull request.

### Python Styleguide

All Python code is formatted with [Black](https://github.com/psf/black) using the default settings. Your code will not be accepted if it is not blackened.

You can use the pre-commit hook.

```
pip install pre-commit
pre-commit install
```

### Suggested changes

We may ask for changes to be made before a PR can be merged, either using [suggested changes](https://docs.github.com/en/github/collaborating-with-issues-and-pull-requests/incorporating-feedback-in-your-pull-request) or pull request comments. You can apply suggested changes directly through the UI. You can make any other changes in your fork, then commit them to your branch.

As you update your PR and apply changes, mark each conversation as [resolved](https://docs.github.com/en/github/collaborating-with-issues-and-pull-requests/commenting-on-a-pull-request#resolving-conversations).

5

DELETE_PYCACHE.bat

Normal file

@ -0,0 +1,5 @@

@echo off
rmdir /s /q __pycache__
rmdir /s /q TwitchChannelPointsMiner\__pycache__
rmdir /s /q TwitchChannelPointsMiner\classes\__pycache__
rmdir /s /q TwitchChannelPointsMiner\classes\entities\__pycache__
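The batch script above only runs on Windows and only removes four hard-coded `__pycache__` directories. As a hedged, cross-platform sketch of the same clean-up (not part of this commit; the function name `delete_pycache` is hypothetical), the standard library can walk the tree and remove every `__pycache__` directory it finds. Note that this is broader than the batch file, which touches only the listed paths.

```python
from pathlib import Path
import shutil


def delete_pycache(root: Path) -> list[Path]:
    """Remove every __pycache__ directory under root and return the removed paths."""
    removed = []
    # sorted() materialises the matches first, so nothing is deleted mid-walk.
    for cache_dir in sorted(root.rglob("__pycache__")):
        if cache_dir.is_dir():
            shutil.rmtree(cache_dir)
            removed.append(cache_dir)
    return removed


if __name__ == "__main__":
    # Run from the repository root, like the batch script.
    for path in delete_pycache(Path(".")):
        print(f"removed {path}")
```

Running the file from the repository root prints one line per removed directory and leaves all other files untouched.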

43

Dockerfile

Normal file

@ -0,0 +1,43 @@

FROM python:3.12

ARG BUILDX_QEMU_ENV

WORKDIR /usr/src/app

COPY ./requirements.txt ./

ENV CRYPTOGRAPHY_DONT_BUILD_RUST=1

RUN pip install --upgrade pip

RUN apt-get update
RUN DEBIAN_FRONTEND=noninteractive apt-get install -qq -y --fix-missing --no-install-recommends \
    gcc \
    libffi-dev \
    rustc \
    zlib1g-dev \
    libjpeg-dev \
    libssl-dev \
    libblas-dev \
    liblapack-dev \
    make \
    cmake \
    automake \
    ninja-build \
    g++ \
    subversion \
    python3-dev \
  && if [ "${BUILDX_QEMU_ENV}" = "true" ] && [ "$(getconf LONG_BIT)" = "32" ]; then \
    pip install -U cryptography==3.3.2; \
  fi \
  && pip install -r requirements.txt \
  && pip cache purge \
  && apt-get remove -y gcc rustc \
  && apt-get autoremove -y \
  && apt-get autoclean -y \
  && apt-get clean -y \
  && rm -rf /var/lib/apt/lists/* \
  && rm -rf /usr/share/doc/*

ADD ./TwitchChannelPointsMiner ./TwitchChannelPointsMiner
ENTRYPOINT [ "python", "run.py" ]

743

README.md

Normal file

@ -0,0 +1,743 @@

|

||||

|

||||

<p align="center">

|

||||

<a href="https://github.com/rdavydov/Twitch-Channel-Points-Miner-v2/releases"><img alt="Latest Version" src="https://img.shields.io/github/v/release/rdavydov/Twitch-Channel-Points-Miner-v2?style=flat&color=white&logo=github&logoColor=white"></a>

|

||||

<a href="https://github.com/rdavydov/Twitch-Channel-Points-Miner-v2/stargazers"><img alt="GitHub Repo stars" src="https://img.shields.io/github/stars/rdavydov/Twitch-Channel-Points-Miner-v2?style=flat&color=limegreen&logo=github&logoColor=white"></a>

|

||||

<a href='https://github.com/MShawon/github-clone-count-badge'><img alt='GitHub Traffic' src='https://img.shields.io/badge/dynamic/json?style=flat&color=blue&label=views&query=count&url=https://gist.githubusercontent.com/rdavydov/ad9a3c6a8d9c322f9a6b62781ea94a93/raw/traffic.json&logo=github&logoColor=white'></a>

|

||||

<a href='https://github.com/MShawon/github-clone-count-badge'><img alt='GitHub Clones' src='https://img.shields.io/badge/dynamic/json?style=flat&color=purple&label=clones&query=count&url=https://gist.githubusercontent.com/rdavydov/fed04b31a250ad522d9ea6547ce87f95/raw/clone.json&logo=github&logoColor=white'></a>

|

||||

<a href="https://github.com/rdavydov/Twitch-Channel-Points-Miner-v2/blob/master/LICENSE"><img alt="License" src="https://img.shields.io/github/license/rdavydov/Twitch-Channel-Points-Miner-v2?style=flat&color=black&logo=unlicense&logoColor=white"></a>

|

||||

<a href="https://github.com/rdavydov/Twitch-Channel-Points-Miner-v2"><img alt="GitHub last commit" src="https://img.shields.io/github/last-commit/rdavydov/Twitch-Channel-Points-Miner-v2?style=flat&color=lightyellow&logo=github&logoColor=white"></a>

|

||||

</p>

|

||||

|

||||

<p align="center">

|

||||

<a href="https://hub.docker.com/r/rdavidoff/twitch-channel-points-miner-v2"><img alt="Docker Version" src="https://img.shields.io/docker/v/rdavidoff/twitch-channel-points-miner-v2?style=flat&color=white&logo=docker&logoColor=white&label=release"></a>

|

||||

<a href="https://hub.docker.com/r/rdavidoff/twitch-channel-points-miner-v2"><img alt="Docker Stars" src="https://img.shields.io/docker/stars/rdavidoff/twitch-channel-points-miner-v2?style=flat&color=limegreen&logo=docker&logoColor=white&label=stars"></a>

|

||||

<a href="https://hub.docker.com/r/rdavidoff/twitch-channel-points-miner-v2"><img alt="Docker Pulls" src="https://img.shields.io/docker/pulls/rdavidoff/twitch-channel-points-miner-v2?style=flat&color=blue&logo=docker&logoColor=white&label=pulls"></a>

|

||||

<a href="https://hub.docker.com/r/rdavidoff/twitch-channel-points-miner-v2"><img alt="Docker Images Size AMD64" src="https://img.shields.io/docker/image-size/rdavidoff/twitch-channel-points-miner-v2/latest?arch=amd64&label=AMD64 image size&style=flat&color=purple&logo=amd&logoColor=white"></a>

|

||||

<a href="https://hub.docker.com/r/rdavidoff/twitch-channel-points-miner-v2"><img alt="Docker Images Size ARM64" src="https://img.shields.io/docker/image-size/rdavidoff/twitch-channel-points-miner-v2/latest?arch=arm64&label=ARM64 image size&style=flat&color=black&logo=arm&logoColor=white"></a>

|

||||

<a href="https://hub.docker.com/r/rdavidoff/twitch-channel-points-miner-v2"><img alt="Docker Images Size ARMv7" src="https://img.shields.io/docker/image-size/rdavidoff/twitch-channel-points-miner-v2/latest?arch=arm&label=ARMv7 image size&style=flat&color=lightyellow&logo=arm&logoColor=white"></a>

|

||||

</p>

|

||||

|

||||

|

||||

<h1 align="center">https://github.com/rdavydov/Twitch-Channel-Points-Miner-v2</h1>

|

||||

|

||||

**Credits**
- Main idea: https://github.com/gottagofaster236/Twitch-Channel-Points-Miner
- ~~Bet system (Selenium): https://github.com/ClementRoyer/TwitchAutoCollect-AutoBet~~
- Based on: https://github.com/Tkd-Alex/Twitch-Channel-Points-Miner-v2

> A simple script that will watch a stream for you and earn the channel points.

> It can wait for a streamer to go live (_+450 points_ when the stream starts), it will automatically click the bonus button (_+50 points_), and it will follow raids (_+250 points_).

Read more about the channel points [here](https://help.twitch.tv/s/article/channel-points-guide).

# README Contents
1. 🤝 [Community](#community)
2. 🚀 [Main differences from the original repository](#main-differences-from-the-original-repository)
3. 🧾 [Logs feature](#logs-feature)
    - [Full logs](#full-logs)
    - [Less logs](#less-logs)
    - [Final report](#final-report)
4. 🧐 [How to use](#how-to-use)
    - [Cloning](#by-cloning-the-repository)
    - [Docker](#docker)
    - [Docker Hub](#docker-hub)
    - [Portainer](#portainer)
    - [Replit](#replit)
    - [Limits](#limits)
5. 🔧 [Settings](#settings)
    - [LoggerSettings](#loggersettings)
    - [StreamerSettings](#streamersettings)
    - [BetSettings](#betsettings)
    - [Bet strategy](#bet-strategy)
    - [FilterCondition](#filtercondition)
    - [Example](#example)
6. 📈 [Analytics](#analytics)
7. 🍪 [Migrating from an old repository (the original one)](#migrating-from-an-old-repository-the-original-one)
8. 🪟 [Windows](#windows)
9. 📱 [Termux](#termux)
10. ⚠️ [Disclaimer](#disclaimer)

## Community
If you want to help with this project, please leave a star 🌟 and share it with your friends! 😎

If you want to offer me a coffee, I would be grateful! ❤️

| | |
|---|---|
|<a href="https://dogechain.info" target="_blank"><img src="https://dynamic-assets.coinbase.com/3803f30367bb3972e192cd3fdd2230cd37e6d468eab12575a859229b20f12ff9c994d2c86ccd7bf9bc258e9bd5e46c5254283182f70caf4bd02cc4f8e3890d82/asset_icons/1597d628dd19b7885433a2ac2d7de6ad196c519aeab4bfe679706aacbf1df78a.png" alt="Donate DOGE" height="16" width="16"></a>|`DAKzncwKkpfPCm1xVU7u2pConpXwX7HS3D` _(<a href="https://dogechain.info" target="_blank">DOGE</a>)_|

If you have any issues or you want to contribute, you are welcome! But please read the [CONTRIBUTING.md](https://github.com/rdavydov/Twitch-Channel-Points-Miner-v2/blob/master/CONTRIBUTING.md) file.

## Main differences from the original repository:

|

||||

|

||||

- Improved logging: emojis, colors, files and much more ✔️

|

||||

- Final report with all the data ✔️

|

||||

- Rewritten codebase now uses classes instead of modules with global variables ✔️

|

||||

- Automatic downloading of the list of followers and using it as an input ✔️

|

||||

- Better 'Watch Streak' strategy in the priority system [#11](https://github.com/Tkd-Alex/Twitch-Channel-Points-Miner-v2/issues/11) ✔️

|

||||

- Auto claiming [game drops](https://help.twitch.tv/s/article/mission-based-drops) from the Twitch inventory [#21](https://github.com/Tkd-Alex/Twitch-Channel-Points-Miner-v2/issues/21) ✔️

|

||||

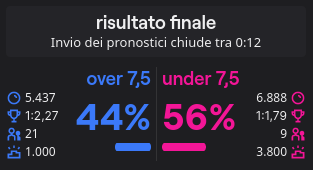

- Placing a bet / making a prediction with your channel points [#41](https://github.com/Tkd-Alex/Twitch-Channel-Points-Miner-v2/issues/41) ([@lay295](https://github.com/lay295)) ✔️

|

||||

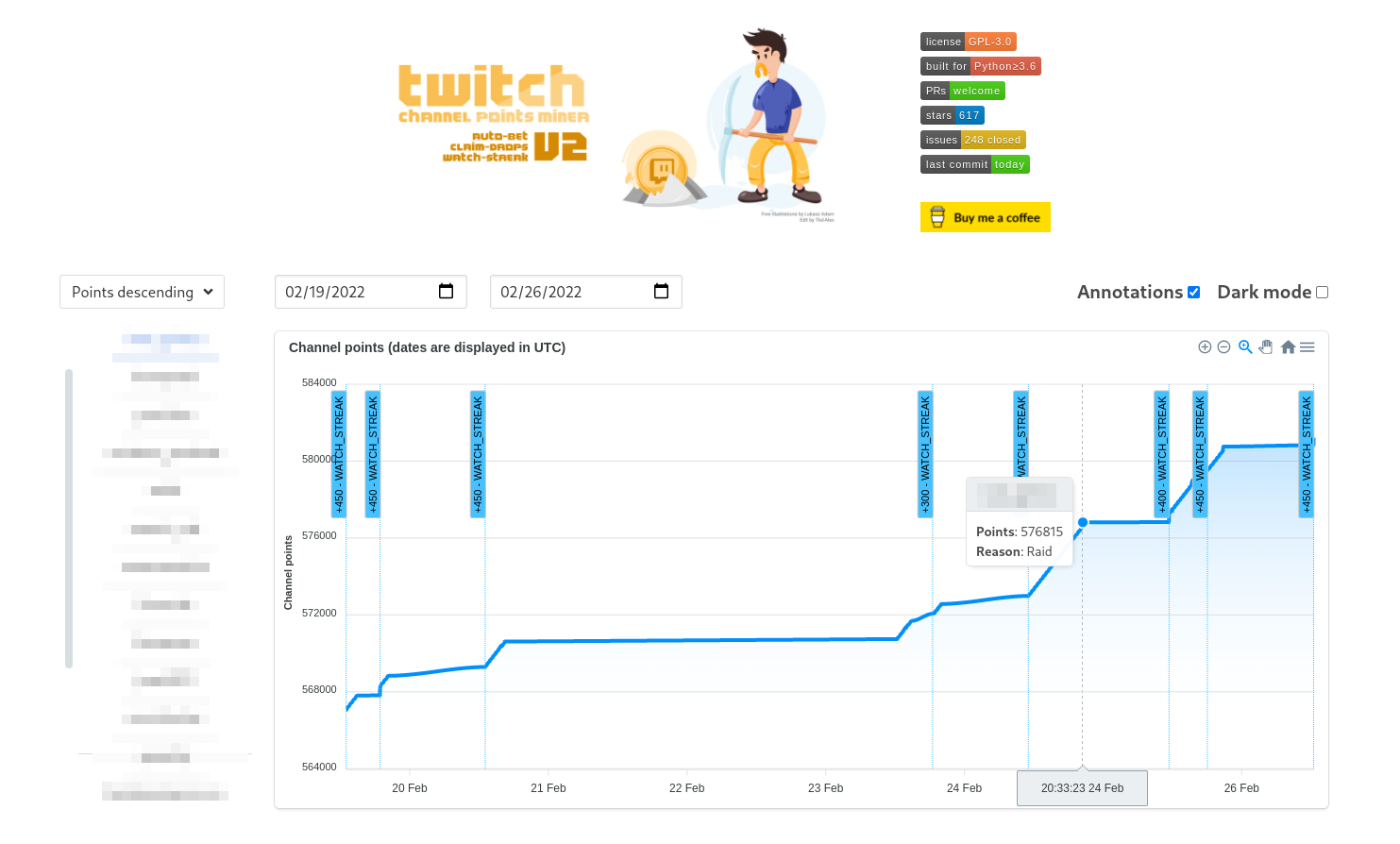

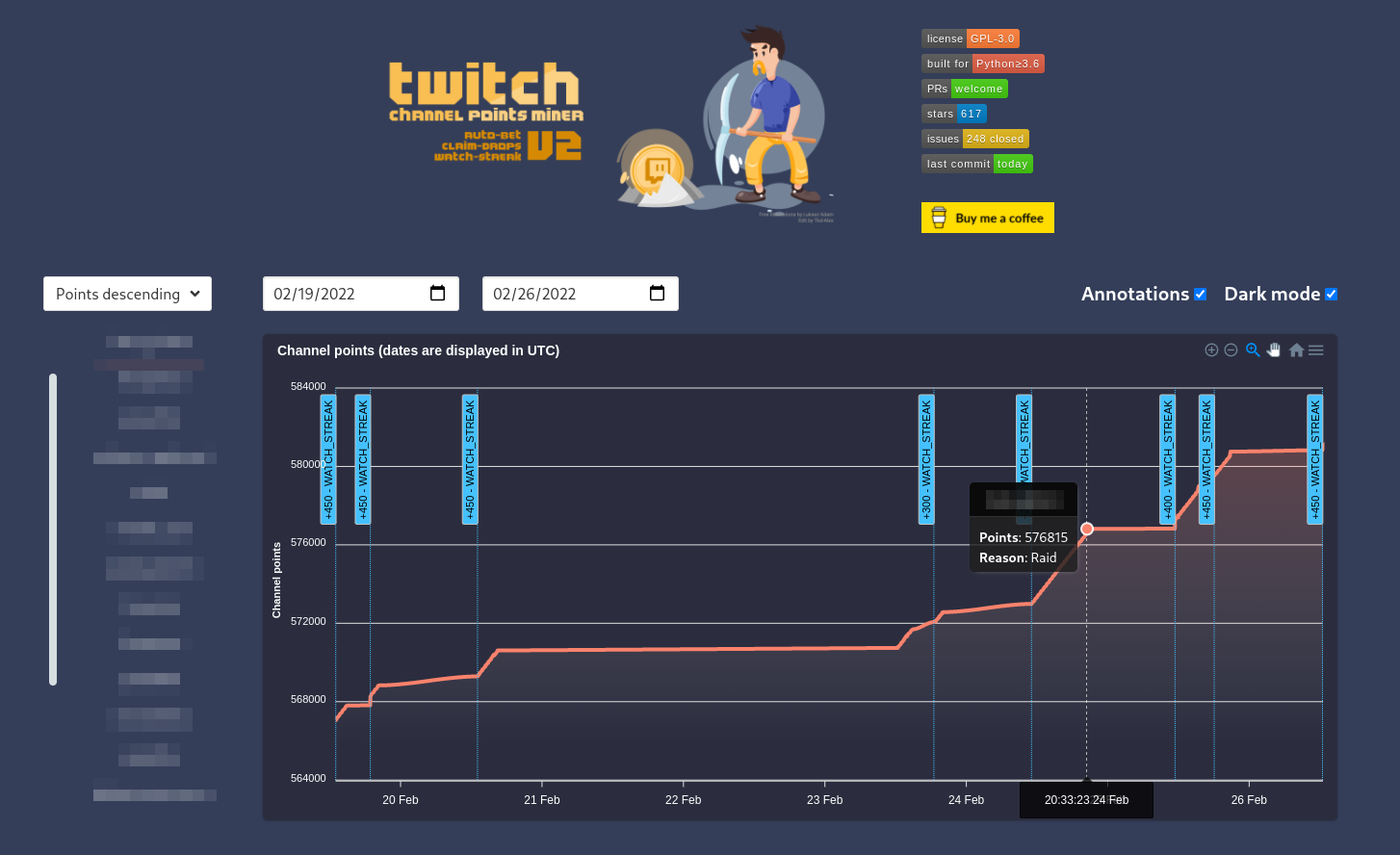

- Switchable analytics chart that shows the progress of your points with various annotations [#96](https://github.com/Tkd-Alex/Twitch-Channel-Points-Miner-v2/issues/96) ✔️

|

||||

- Joining the IRC Chat to increase the watch time and get StreamElements points [#47](https://github.com/Tkd-Alex/Twitch-Channel-Points-Miner-v2/issues/47) ✔️

|

||||

- [Moments](https://help.twitch.tv/s/article/moments) claiming [#182](https://github.com/rdavydov/Twitch-Channel-Points-Miner-v2/issues/182) ✔️

|

||||

- Notifying on `@nickname` mention in the Twitch chat [#227](https://github.com/rdavydov/Twitch-Channel-Points-Miner-v2/issues/227) ✔️

|

||||

|

||||

## Logs feature
### Full logs
```
%d/%m/%y %H:%M:%S - INFO - [run]: 💣 Start session: '9eb934b0-1684-4a62-b3e2-ba097bd67d35'
%d/%m/%y %H:%M:%S - INFO - [run]: 🤓 Loading data for x streamers. Please wait ...
%d/%m/%y %H:%M:%S - INFO - [set_offline]: 😴 Streamer(username=streamer-username1, channel_id=0000000, channel_points=67247) is Offline!
%d/%m/%y %H:%M:%S - INFO - [set_offline]: 😴 Streamer(username=streamer-username2, channel_id=0000000, channel_points=4240) is Offline!
%d/%m/%y %H:%M:%S - INFO - [set_offline]: 😴 Streamer(username=streamer-username3, channel_id=0000000, channel_points=61365) is Offline!
%d/%m/%y %H:%M:%S - INFO - [set_offline]: 😴 Streamer(username=streamer-username4, channel_id=0000000, channel_points=3760) is Offline!
%d/%m/%y %H:%M:%S - INFO - [set_online]: 🥳 Streamer(username=streamer-username, channel_id=0000000, channel_points=61365) is Online!
%d/%m/%y %H:%M:%S - INFO - [start_bet]: 🔧 Start betting for EventPrediction(event_id=xxxx-xxxx-xxxx-xxxx, title=Please star this repo) owned by Streamer(username=streamer-username, channel_id=0000000, channel_points=61365)
%d/%m/%y %H:%M:%S - INFO - [__open_coins_menu]: 🔧 Open coins menu for EventPrediction(event_id=xxxx-xxxx-xxxx-xxxx, title=Please star this repo)
%d/%m/%y %H:%M:%S - INFO - [__click_on_bet]: 🔧 Click on the bet for EventPrediction(event_id=xxxx-xxxx-xxxx-xxxx, title=Please star this repo)
%d/%m/%y %H:%M:%S - INFO - [__enable_custom_bet_value]: 🔧 Enable input of custom value for EventPrediction(event_id=xxxx-xxxx-xxxx-xxxx, title=Please star this repo)
%d/%m/%y %H:%M:%S - INFO - [on_message]: ⏰ Place the bet after: 89.99s for: EventPrediction(event_id=xxxx-xxxx-xxxx-xxxx-15c61914ef69, title=Please star this repo)
%d/%m/%y %H:%M:%S - INFO - [on_message]: 🚀 +12 → Streamer(username=streamer-username, channel_id=0000000, channel_points=61377) - Reason: WATCH.
%d/%m/%y %H:%M:%S - INFO - [make_predictions]: 🍀 Going to complete bet for EventPrediction(event_id=xxxx-xxxx-xxxx-xxxx-15c61914ef69, title=Please star this repo) owned by Streamer(username=streamer-username, channel_id=0000000, channel_points=61377)
%d/%m/%y %H:%M:%S - INFO - [make_predictions]: 🍀 Place 5k channel points on: SI (BLUE), Points: 848k, Users: 190 (70.63%), Odds: 1.24 (80.65%)
%d/%m/%y %H:%M:%S - INFO - [on_message]: 🚀 +6675 → Streamer(username=streamer-username, channel_id=0000000, channel_points=64206) - Reason: PREDICTION.
%d/%m/%y %H:%M:%S - INFO - [on_message]: 📊 EventPrediction(event_id=xxxx-xxxx-xxxx-xxxx, title=Please star this repo) - Result: WIN, Points won: 6675
%d/%m/%y %H:%M:%S - INFO - [on_message]: 🚀 +12 → Streamer(username=streamer-username, channel_id=0000000, channel_points=64218) - Reason: WATCH.
%d/%m/%y %H:%M:%S - INFO - [on_message]: 🚀 +12 → Streamer(username=streamer-username, channel_id=0000000, channel_points=64230) - Reason: WATCH.
%d/%m/%y %H:%M:%S - INFO - [claim_bonus]: 🎁 Claiming the bonus for Streamer(username=streamer-username, channel_id=0000000, channel_points=64230)!
%d/%m/%y %H:%M:%S - INFO - [on_message]: 🚀 +60 → Streamer(username=streamer-username, channel_id=0000000, channel_points=64290) - Reason: CLAIM.
%d/%m/%y %H:%M:%S - INFO - [on_message]: 🚀 +12 → Streamer(username=streamer-username, channel_id=0000000, channel_points=64326) - Reason: WATCH.
%d/%m/%y %H:%M:%S - INFO - [on_message]: 🚀 +400 → Streamer(username=streamer-username, channel_id=0000000, channel_points=64326) - Reason: WATCH_STREAK.
%d/%m/%y %H:%M:%S - INFO - [claim_bonus]: 🎁 Claiming the bonus for Streamer(username=streamer-username, channel_id=0000000, channel_points=64326)!
%d/%m/%y %H:%M:%S - INFO - [on_message]: 🚀 +60 → Streamer(username=streamer-username, channel_id=0000000, channel_points=64386) - Reason: CLAIM.
%d/%m/%y %H:%M:%S - INFO - [on_message]: 🚀 +12 → Streamer(username=streamer-username, channel_id=0000000, channel_points=64398) - Reason: WATCH.
%d/%m/%y %H:%M:%S - INFO - [update_raid]: 🎭 Joining raid from Streamer(username=streamer-username, channel_id=0000000, channel_points=64398) to another-username!
%d/%m/%y %H:%M:%S - INFO - [on_message]: 🚀 +250 → Streamer(username=streamer-username, channel_id=0000000, channel_points=6845) - Reason: RAID.
```
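The `%d/%m/%y %H:%M:%S` prefix in the sample above is a set of `strftime` directives standing in for the real timestamp. As a rough sketch of how a line in this shape can be produced with the standard `logging` module (the format string is inferred from the sample output and is an assumption, not the miner's actual logger configuration; `build_formatter` and `format_line` are hypothetical helpers):

```python
import logging
import time

# Assumed layout, inferred from the sample lines; not taken from the project source.
FULL_FORMAT = "%(asctime)s - %(levelname)s - [%(funcName)s]: %(message)s"
DATE_FORMAT = "%d/%m/%y %H:%M:%S"


def build_formatter() -> logging.Formatter:
    formatter = logging.Formatter(fmt=FULL_FORMAT, datefmt=DATE_FORMAT)
    # Render timestamps in UTC so the output does not depend on the local zone.
    formatter.converter = time.gmtime
    return formatter


def format_line(message: str, func: str, created: float) -> str:
    """Format one message as if it had been logged from `func` at `created`."""
    record = logging.LogRecord(
        name="miner",
        level=logging.INFO,
        pathname="run.py",
        lineno=0,
        msg=message,
        args=None,
        exc_info=None,
        func=func,
    )
    record.created = created  # pin the timestamp (seconds since the epoch)
    return build_formatter().format(record)


if __name__ == "__main__":
    print(format_line("Start session", "run", time.time()))
```

Dropping `%(levelname)s` and `[%(funcName)s]` from the format string gives a shorter line with only the timestamp and the message.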

### Less logs
```
%d/%m %H:%M:%S - 💣 Start session: '9eb934b0-1684-4a62-b3e2-ba097bd67d35'
%d/%m %H:%M:%S - 🤓 Loading data for 13 streamers. Please wait ...
%d/%m %H:%M:%S - 😴 streamer-username1 (xxx points) is Offline!
%d/%m %H:%M:%S - 😴 streamer-username2 (xxx points) is Offline!
%d/%m %H:%M:%S - 😴 streamer-username3 (xxx points) is Offline!
%d/%m %H:%M:%S - 😴 streamer-username4 (xxx points) is Offline!
%d/%m %H:%M:%S - 🥳 streamer-username (xxx points) is Online!
%d/%m %H:%M:%S - 🔧 Start betting for EventPrediction: Please star this repo owned by streamer-username (xxx points)
%d/%m %H:%M:%S - 🔧 Open coins menu for EventPrediction: Please star this repo
%d/%m %H:%M:%S - 🔧 Click on the bet for EventPrediction: Please star this repo
%d/%m %H:%M:%S - 🔧 Enable input of custom value for EventPrediction: Please star this repo
%d/%m %H:%M:%S - ⏰ Place the bet after: 89.99s EventPrediction: Please star this repo
%d/%m %H:%M:%S - 🚀 +12 → streamer-username (xxx points) - Reason: WATCH.
%d/%m %H:%M:%S - 🍀 Going to complete bet for EventPrediction: Please star this repo owned by streamer-username (xxx points)
%d/%m %H:%M:%S - 🍀 Place 5k channel points on: SI (BLUE), Points: 848k, Users: 190 (70.63%), Odds: 1.24 (80.65%)
%d/%m %H:%M:%S - 🚀 +6675 → streamer-username (xxx points) - Reason: PREDICTION.
%d/%m %H:%M:%S - 📊 EventPrediction: Please star this repo - Result: WIN, Points won: 6675
%d/%m %H:%M:%S - 🚀 +12 → streamer-username (xxx points) - Reason: WATCH.
%d/%m %H:%M:%S - 🚀 +12 → streamer-username (xxx points) - Reason: WATCH.
%d/%m %H:%M:%S - 🚀 +60 → streamer-username (xxx points) - Reason: CLAIM.
%d/%m %H:%M:%S - 🚀 +12 → streamer-username (xxx points) - Reason: WATCH.
%d/%m %H:%M:%S - 🚀 +400 → streamer-username (xxx points) - Reason: WATCH_STREAK.
%d/%m %H:%M:%S - 🚀 +60 → streamer-username (xxx points) - Reason: CLAIM.
%d/%m %H:%M:%S - 🚀 +12 → streamer-username (xxx points) - Reason: WATCH.
%d/%m %H:%M:%S - 🎭 Joining raid from streamer-username (xxx points) to another-username!
%d/%m %H:%M:%S - 🚀 +250 → streamer-username (xxx points) - Reason: RAID.
```

### Final report:
```
%d/%m/%y %H:%M:%S - 🛑 End session 'f738d438-cdbc-4cd5-90c4-1517576f1299'
%d/%m/%y %H:%M:%S - 📄 Logs file: /.../path/Twitch-Channel-Points-Miner-v2/logs/username.timestamp.log
%d/%m/%y %H:%M:%S - ⌛ Duration 10:29:19.547371

%d/%m/%y %H:%M:%S - 📊 BetSettings(Strategy=Strategy.SMART, Percentage=7, PercentageGap=20, MaxPoints=7500)
%d/%m/%y %H:%M:%S - 📊 EventPrediction(event_id=xxxx-xxxx-xxxx-xxxx, title="Event Title1")
    Streamer(username=streamer-username, channel_id=0000000, channel_points=67247)
    Bet(TotalUsers=1k, TotalPoints=11M), Decision={'choice': 'B', 'amount': 5289, 'id': 'xxxx-yyyy-zzzz'})
    Outcome0(YES (BLUE) Points: 7M, Users: 641 (58.49%), Odds: 1.6 (62.5%))
    Outcome1(NO (PINK) Points: 4M, Users: 455 (41.51%), Odds: 2.65 (37.74%))
    Result: {'type': 'LOSE', 'won': 0}
%d/%m/%y %H:%M:%S - 📊 EventPrediction(event_id=yyyy-yyyy-yyyy-yyyy, title="Event Title2")
    Streamer(username=streamer-username, channel_id=0000000, channel_points=3453464)
    Bet(TotalUsers=921, TotalPoints=11M), Decision={'choice': 'A', 'amount': 4926, 'id': 'xxxx-yyyy-zzzz'})
    Outcome0(YES (BLUE) Points: 9M, Users: 562 (61.02%), Odds: 1.31 (76.34%))
    Outcome1(YES (PINK) Points: 3M, Users: 359 (38.98%), Odds: 4.21 (23.75%))
    Result: {'type': 'WIN', 'won': 6531}
%d/%m/%y %H:%M:%S - 📊 EventPrediction(event_id=ad152117-251b-4666-b683-18e5390e56c3, title="Event Title3")
    Streamer(username=streamer-username, channel_id=0000000, channel_points=45645645)
    Bet(TotalUsers=260, TotalPoints=3M), Decision={'choice': 'A', 'amount': 5054, 'id': 'xxxx-yyyy-zzzz'})
    Outcome0(YES (BLUE) Points: 689k, Users: 114 (43.85%), Odds: 4.24 (23.58%))
    Outcome1(NO (PINK) Points: 2M, Users: 146 (56.15%), Odds: 1.31 (76.34%))
    Result: {'type': 'LOSE', 'won': 0}

%d/%m/%y %H:%M:%S - 🤖 Streamer(username=streamer-username, channel_id=0000000, channel_points=67247), Total points gained (after farming - before farming): -7838
%d/%m/%y %H:%M:%S - 💰 CLAIM(11 times, 550 gained), PREDICTION(1 times, 6531 gained), WATCH(35 times, 350 gained)
%d/%m/%y %H:%M:%S - 🤖 Streamer(username=streamer-username2, channel_id=0000000, channel_points=61365), Total points gained (after farming - before farming): 977
%d/%m/%y %H:%M:%S - 💰 CLAIM(4 times, 240 gained), REFUND(1 times, 605 gained), WATCH(11 times, 132 gained)
%d/%m/%y %H:%M:%S - 🤖 Streamer(username=streamer-username5, channel_id=0000000, channel_points=25960), Total points gained (after farming - before farming): 1680
%d/%m/%y %H:%M:%S - 💰 CLAIM(17 times, 850 gained), WATCH(53 times, 530 gained)
%d/%m/%y %H:%M:%S - 🤖 Streamer(username=streamer-username6, channel_id=0000000, channel_points=9430), Total points gained (after farming - before farming): 1120
%d/%m/%y %H:%M:%S - 💰 CLAIM(14 times, 700 gained), WATCH(42 times, 420 gained), WATCH_STREAK(1 times, 450 gained)
```
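The percentages printed next to `Users` and `Odds` in the sample report can be reproduced with simple arithmetic. The sketch below is an interpretation of the sample numbers, not the miner's own code, and both function names are hypothetical: the `Odds` percentage appears to be `100 / odds`, and the `Users` percentage appears to be each outcome's share of all users in the prediction.

```python
def implied_probability(odds: float) -> float:
    """Percentage shown after an Odds value, assumed to be 100 / odds."""
    return round(100 / odds, 2)


def user_share(users: int, total_users: int) -> float:
    """Percentage of all participating users who picked one outcome."""
    return round(100 * users / total_users, 2)


if __name__ == "__main__":
    # Values taken from the sample report: Odds 2.65 and 641 of 641 + 455 users.
    print(implied_probability(2.65))
    print(user_share(641, 641 + 455))
```

Under this reading, lower odds mean a higher implied chance of that outcome winning and a smaller payout per point placed.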

## How to use:
First of all, please create a run.py file. You can just copy [example.py](https://github.com/rdavydov/Twitch-Channel-Points-Miner-v2/blob/master/example.py) and modify it according to your needs.
```python
# -*- coding: utf-8 -*-

import logging
from colorama import Fore
from TwitchChannelPointsMiner import TwitchChannelPointsMiner
from TwitchChannelPointsMiner.logger import LoggerSettings, ColorPalette
from TwitchChannelPointsMiner.classes.Chat import ChatPresence
from TwitchChannelPointsMiner.classes.Discord import Discord
from TwitchChannelPointsMiner.classes.Webhook import Webhook
from TwitchChannelPointsMiner.classes.Telegram import Telegram
from TwitchChannelPointsMiner.classes.Matrix import Matrix
from TwitchChannelPointsMiner.classes.Pushover import Pushover
from TwitchChannelPointsMiner.classes.Settings import Priority, Events, FollowersOrder
from TwitchChannelPointsMiner.classes.entities.Bet import Strategy, BetSettings, Condition, OutcomeKeys, FilterCondition, DelayMode
from TwitchChannelPointsMiner.classes.entities.Streamer import Streamer, StreamerSettings

twitch_miner = TwitchChannelPointsMiner(
    username="your-twitch-username",
    password="write-your-secure-psw",  # If no password is provided, the script will ask for it interactively
    claim_drops_startup=False,  # If you want to auto claim all drops from Twitch inventory on the startup
    priority=[  # Custom priority, in this case for example:
        Priority.STREAK,  # - First of all, we want to catch all watch streaks from all streamers
        Priority.DROPS,  # - When there are no more watch streaks to catch, wait until all drops are collected over the streamers
        Priority.ORDER  # - When we have all of the drops claimed and no watch-streak available, use the order priority (POINTS_ASCENDING, POINTS_DESCEDING)
    ],
    enable_analytics=False,  # Disables Analytics if False. Disabling it significantly reduces memory consumption
    disable_ssl_cert_verification=False,  # Set to True at your own risk and only to fix SSL: CERTIFICATE_VERIFY_FAILED error
    disable_at_in_nickname=False,  # Set to True if you want to check for your nickname mentions in the chat even without @ sign
    logger_settings=LoggerSettings(
        save=True,  # If you want to save logs in a file (suggested)
        console_level=logging.INFO,  # Level of logs - use logging.DEBUG for more info
        console_username=False,  # Adds a username to every console log line if True. Also adds it to Telegram, Discord, etc. Useful when you have several accounts
        auto_clear=True,  # Create a file rotation handler with interval = 1D and backupCount = 7 if True (default)
        time_zone="",  # Set a specific time zone for console and file loggers. Use tz database names. Example: "America/Denver"
        file_level=logging.DEBUG,  # Level of logs - If you think the log file is too big, use logging.INFO
        emoji=True,  # On Windows, we have a problem printing emoji. Set to False if you have a problem
        less=False,  # If you think that the logs are too verbose, set this to True
        colored=True,  # If you want to print colored text
        color_palette=ColorPalette(  # You can also create a custom palette color (for the common message).
            STREAMER_online="GREEN",  # Don't worry about lower/upper case. The script will parse all the values.
            streamer_offline="red",  # Read more in README.md
            BET_wiN=Fore.MAGENTA  # Color allowed are: [BLACK, RED, GREEN, YELLOW, BLUE, MAGENTA, CYAN, WHITE, RESET].
        ),
        telegram=Telegram(  # You can omit or set to None if you don't want to receive updates on Telegram
            chat_id=123456789,  # Chat ID to send messages @getmyid_bot
            token="123456789:shfuihreuifheuifhiu34578347",  # Telegram API token @BotFather
            events=[Events.STREAMER_ONLINE, Events.STREAMER_OFFLINE,
                    Events.BET_LOSE, Events.CHAT_MENTION],  # Only these events will be sent to the chat
            disable_notification=True,  # Revoke the notification (sound/vibration)
        ),
        discord=Discord(
            webhook_api="https://discord.com/api/webhooks/0123456789/0a1B2c3D4e5F6g7H8i9J",  # Discord Webhook URL
            events=[Events.STREAMER_ONLINE, Events.STREAMER_OFFLINE,
                    Events.BET_LOSE, Events.CHAT_MENTION],  # Only these events will be sent to the chat
        ),
        webhook=Webhook(
            endpoint="https://example.com/webhook",  # Webhook URL
            method="GET",  # GET or POST
            events=[Events.STREAMER_ONLINE, Events.STREAMER_OFFLINE,
                    Events.BET_LOSE, Events.CHAT_MENTION],  # Only these events will be sent to the endpoint
        ),
        matrix=Matrix(
            username="twitch_miner",  # Matrix username (without homeserver)
            password="...",  # Matrix password
            homeserver="matrix.org",  # Matrix homeserver
            room_id="...",  # Room ID
            events=[Events.STREAMER_ONLINE, Events.STREAMER_OFFLINE, Events.BET_LOSE],  # Only these events will be sent
        ),
        pushover=Pushover(
            userkey="YOUR-ACCOUNT-TOKEN",  # Login to https://pushover.net/, the user token is on the main page
            token="YOUR-APPLICATION-TOKEN",  # Create an application on the website, and use the token shown in your application
            priority=0,  # Read more about priority here: https://pushover.net/api#priority
            sound="pushover",  # A list of sounds can be found here: https://pushover.net/api#sounds
            events=[Events.CHAT_MENTION, Events.DROP_CLAIM],  # Only these events will be sent
        )
    ),